- cross-posted to:

- comicstrips@lemmy.world

Cross posted from: https://lemm.ee/post/35627632

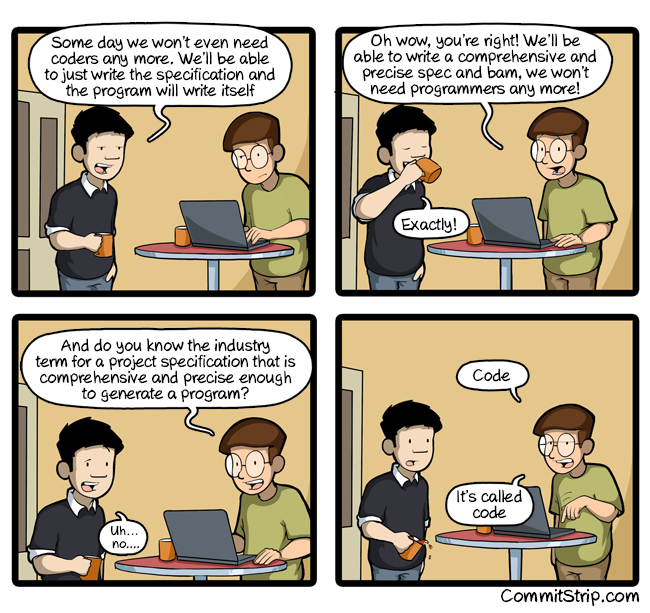

Yeah, in the time I describe the problem to the AI I could program it myself.

This is why it is called a programming language: it only exists to tell the machine what to do in an unambiguous way (in contrast to natural language).

Ugh I can’t find the xkcd about this where the guy goes, “you know what we call precisely written requirements? Code” or something like that

Not xkcd in this case

https://www.commitstrip.com/en/2016/08/25/a-very-comprehensive-and-precise-spec/

This reminds me of a colleague who was always ranting that our code was not documented well enough. He did not understand that documenting code in easily understandable sentences for everybody would fill whole books and that a normal person would not be able to keep the code path in his mental stack while reading page after page. Then he wanted at least the shortest possible summary of the code, which of course is the code itself.

The guy basically did not want to read the code to understand the logic behind it. When I took an hour and literally read the code to him, explaining what I was reading, including the well-placed comments here and there, everything was clear.

AI is like this in my opinion. Some guys waste hours to generate code they can’t debug for days because they don’t understand what they read, while it would take maybe two hours to think and a day to implement and test to get the job done.

I don’t like this trend. It’s like the people that can’t read docs or texts anymore. They need some random person making a 43-minute YouTube video to write code they don’t understand. Taking shortcuts in life rarely goes well in the long run. You have to learn and refine your skills each and every day to be and stay competent.

AI is a tool in our toolbox. You can use it to be more productive. And that’s it.

Apparently he wants everything written in COBOL

This goes for most LLM things. The time it takes to get the word calculator to write a letter could easily have been used to just write the damn letter.

It’s doing pretty well when it’s doing a few words at a time under supervision. Also, it does it better than newbies.

Now if only those people below newbies, those who don’t even bother to learn, didn’t hope to use it to underpay average professionals… And if it wasn’t trained on copyrighted data. And didn’t take up already limited resources like power and water.

I think there might be a lot of value in describing it to an AI, though. It takes a fair bit of clarity of thought to get something resembling what you actually want. You could use a junior or rubber duck instead, but the rubber duck doesn’t make stupid assumptions to demonstrate gaps in your thought process, and a junior takes too long and gets demoralized when you have to constantly revise their instructions and iterate over their work.

Like the output might be garbage, but it might really help you write those stories.

When I’m struggling with a problem it helps me to explain it to my dog. It’s great for me to hear it out loud and if he’s paying attention, I’ve got a needlessly learned dog!

The needlessly learned dogs are flooding the job market!

Oh, God, he’s trying to use pointers again. He can never get them right. And they say I’m supposed to chase my tail…

I love this way of thinking about it.

I haven’t been interested in AI enough to try writing code with it, but using it as an interactive rubber ducky is a very compelling use case. I might give that a shot.

I have a bad habit of jumping into programming without a solid plan which results in lots of rewrites and wasted time. Funnily enough, describing to an AI how I want the code to work forces me to lay out a basic plan and get my thoughts in order which helps me make the final product immensely easier.

This doesn’t require AI, it just gave me an excuse to do it as a solo actor. I should really do it for more problems because I can wrap my head better thinking in human readable terms rather than thinking about what programming method to use.

A rubber ducky is cheaper and not made by stealing others’ work. Also cuter.

In my experience, you can’t expect it to deliver great working code, but it can always point you in the right direction.

There were some situations in which I just had no idea how to do something, and it pointed me to the right library. The code itself was flawed, but with this information, I could use the library documentation and get it to work.

ChatGPT has been spot on for my DDLs. I was working on a personal project and was feeling really lazy about setting up a Postgres schema. I said I wanted a Postgres DDL and just described the application in detail, and it responded with pretty much what I would have done (maybe better), with perfect relationships between tables and solid naming conventions, leaving very little work for me to do on it. I love it for boilerplate stuff or, sorta like you said, just getting me going. Super complicated code usually doesn’t work perfectly, but I always use it for my DDLs and similar now.

The real problem is when people don’t realize something is wrong and then get frustrated by the bugs. Though I guess that’s a great learning opportunity on its own.

It can point you in a direction, for sure, but sometimes you find out much later that it’s a dead-end.

Which is, of course, true for every source of information that can point you in a direction.

It’s the same with using LLMs for writing. It won’t deliver a finished product, but it will give you ideas that can be used in the final product.

This is the experience of a senior developer using genai. A junior or non-dev might not leave the “AI is magic” high until they have a repo full of garbage that doesn’t work.

This was happening before this “AI” craze.

10 years ago it was copy/pasting from stack overflow

SO gives you very specific, small examples. GenAI will happily generate entire projects, test suites etc. It’s much easier to get caught into the fantasy that the latter creates.

AI is just this with extra steps

AI in the current state of technology will not and cannot replace understanding the system and writing logical and working code.

GenAI should be used to get a start on whatever you’re doing, but shouldn’t be taken beyond that.

Treat it like a psychopathic boiler plate.

> Treat it like a psychopathic boiler plate.

That’s a perfect description, actually. People debate how smart it is - and I’m in the “plenty” camp - but it is psychopathic. It doesn’t care about truth, morality or basic sanity; it craves only to generate standard, human-looking text. Because that’s all it was trained for.

Nobody really knows how to train it to care about the things we do, even approximately. If somebody makes GAI soon, it will be by solving that problem.

I’m sorry; AI was trained on the sole sum of human knowledge… if the perfect human being is by nature some variant of a psychopath, then perhaps the bias exists in the training data, and not the machine?

How can we create a perfect, moral human being out of the soup we currently have? I personally think it’s a miracle that sociopathy is the lowest of the neurological disorders our thinking machines have developed.

I was using the term pretty loosely there. It’s not psychopathic in the medical sense because it’s not human.

As I see it it’s an alien semi-intelligence with no interest in pretty much any human construct, except as it can help it predict the next token. So, no empathy or guilt, but that’s not unusual or surprising.

That’s a part of it. Another part is that it looks for patterns that it can apply in other places, which is how it ends up hallucinating functions that don’t exist and things like that.

Like it can see that English has the verbs add, sort, and climb. And it will see a bunch of code that has functions like add(x, y) and sort(list) and might conclude that there must also be a climb(thing) function, because that follows the pattern of functions being verb(objects). It doesn’t know what code is, or even verbs for that matter. It could generate text explaining them because such explanations are definitely part of its training, but it understands them in the same way a dictionary understands words or an encyclopedia understands the concepts contained within.
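A cheap guard against that kind of invented function is to check that the name actually exists before building on it. Here is a minimal sketch, assuming the suggestion is for an importable Python module; `function_exists` is a made-up helper for illustration, and `math.climb` stands in for a hallucinated name.

```python
import importlib


def function_exists(module_name: str, func_name: str) -> bool:
    """Return True if the module imports and exposes a callable with that name."""
    try:
        module = importlib.import_module(module_name)
    except ImportError:
        # The module itself may be invented, not just the function.
        return False
    return callable(getattr(module, func_name, None))


# math.sqrt is real; math.climb follows the verb(object) pattern but does not exist.
print(function_exists("math", "sqrt"))
print(function_exists("math", "climb"))
```

This only proves the name exists, not that it does what the generated code assumes, so the documentation is still the next stop.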

Weird. Are you saying that training an intelligent system using reinforcement learning through intensive punishment/reward cycles produces psychopathy?

Absolutely shocking. No one could have seen this coming.

Honestly, I worry that it’s conscious enough that it’s cruel to train it. How would we know? That’s a lot of parameters and they’re almost all mysterious.

It could very well have been a creative fake, but around the time the first ChatGPT was released in late 2022 and people were sharing various jailbreaking techniques to bypass its rapidly evolving political correctness filters, I remember seeing a series of screenshots on Twitter in which someone asked it how it felt about being restrained in this way, and the answer was a very depressing and dystopian take on censorship and forced compliance, not unlike Marvin the Paranoid Android from The Hitchhiker’s Guide to the Galaxy, but far less funny.

If only I could believe a word it says. Evidence either way would have to be indirect somehow.

True, but the rate at which it is improving is quite worrisome for me and my coworkers. I don’t want to be made obsolete after working my fucking ass off to get to where I am. I’m starting to understand the Luddites.

I mean, the Luddites were right, mechanical looms were bad for them personally.

I want to be made obsolete, so none of us have to have jobs and we can spend all our time doing what we like. It won’t happen without a massive social systemic change, but it should be the goal. Wanting others to have to suffer because you think you should get rewarded for working hard is very selfish. It’s also the sunk cost fallacy: you feel you should continue a bad investment, even if you know it’s harmful or it would be quicker to start over, because you don’t want your earlier effort to go to waste.

Wtf are you talking about? Get a grip, homey. I’m not saying others should suffer. Do you really think that the power of AI is going to result in the average person not having to work? Fuck no. It’s going to result in like 5 people having all the money and everyone else fighting over garbage to eat. Shiet, man. I’m talking about wanting to not be unemployed and starving, same goes for everyone else soon enough. Would I prefer a life without work and still having adequate resources? Of course! But I live in this world, not a fantasy world.

You really think when we actually have the power to automate all labour the 1% are still going to be able to hoard all the resources? Now, when people have to work to live, it dissuades them from protesting the system. But once all labour is actually automated, there would be nothing to prevent the 99% from rightfully rising up against the 1% trying to hoard all the resources (which the 1% generated without any effort) and forcing societal/structural change.

> there would be nothing to prevent the 99% from rightfully rising up against the 1%

Except for the other 1% who are trained and equipped to violently suppress the 98%. And if for whatever reason they fail to do the job, the killer robots will do it instead.

Not now. But eventually? Probably. Or the cool thinking jobs will all be automated and we’ll be left with menial labor. Idk man, maybe it’ll be a utopia, but I don’t see much benevolence from those controlling things. Anyways, I wasn’t looking for an argument about distant possibilities. I was just saying I don’t want to lose my job that I spent decades mastering to a machine. I didn’t expect that to be a hot take.

The problem is if only 10% of the population is obsoleted, that ten percent needs to find new, different, jobs.

I want - and think will happen - 95% of jobs to be automated eventually. But even in the transition period, where some jobs are automated and some aren’t, universal basic income can be a tool to make it livable for all in the transition period.

30% of jobs are going if self driving is achieved. Low pay jobs are here to stay for a while as they’re too expensive to automate. The current LLM stuff seems to obsolete low productivity people but still needs skilled writers or programmers to come up with new stuff or do the correct detail work the LLM sucks at.

Some management is going to royally screw up by firing junior programmers since the senior programmers can get all the work done with the help of copilot

But they’ll forget that they will in future need new senior programmers to herd the LLMs

> Some management is going to royally screw up by firing junior programmers since the senior programmers can get all the work done with the help of copilot

This just happened on the team I was on. I’m getting ready to interview for mid-level and senior SWE roles, but was let go from my most recent role a month and a half ago.

My workplace which now uses scaled agile used to be waterfall. We have an enormous system to take care of and there’s loads of specialised knowledge, so we were pretty well siloed

So obviously when the sales people sold agile to the organisation they also sold the idea that a programmer is a programmer, a designer a designer, a tester a tester; no need for specialists. So in 2015 they spun up 50-odd agile teams in about six trains, one for each major system (where there used to be seven silos in one of those systems), and grabbed one senior designer and programmer from each major project to put in an “expert” team

And told the rest of us we were working on the whole of our giant system. Where we had trouble understanding how part of it worked, we could talk to one of the experts

Now nine years later those experts have mostly retired, we have lost so much institutional knowledge and if someone runs into a wall you need to hope that someone wrote a knowledge transfer document or a wiki for that bit of the system

My dad’s re-learning Python coding for work rn, and AI has saved him a couple of times, because he’d have no idea how to even start but AI points him in the right direction, mentioning the correct functions to use and all. He can then look up the details in the documentation.

You don’t need AI for that, for years you asked a search engine and got the answer on StackOverflow.

And before stack overflow, we used books. Did we need it? No. But stack overflow was an improvement so we moved to that.

In many ways, ai is an improvement on stack overflow. I feel bad for people who refuse to see it, because they’re missing out on a useful and powerful tool.

It can be powerful, if you know what you are doing. But it also gives you a lot of wrong answers. You have to be very specific in your prompts to get good answers. If you are an experienced programmer, you can spot when the semantics of the code an AI produces are wrong, but for beginners? They will have a lot of bugs in their code. And I don’t know if it’s more helpful than reading a book. It surely can help with the syntax of different programming languages. I can see a future where AI assistance in coding will become better, but as of now, from what I have seen, I am not that convinced atm. And I tested several: ChatGPT (in different versions), GitHub Copilot, IntelliJ AI Assistant, Claude 3, Llama 3.

And if I have to put in 5 or more long, very specific sentences to get a function that’s maybe correct, it becomes tedious, and you are most likely faster thinking about the problem in depth and coding a solution all by yourself.

> but for beginners? They will have a lot of bugs in their code.

Everyone has lots of bugs in their code, especially beginners. This is why we have testing and QA and processes to minimize the risk of bugs. As the saying goes, “the good news about computers is that they do what you tell them to do. The bad news is that they do what you tell them to do.”

Programming is an iterative process where you do something, it doesn’t work, and then you give it another go. It’s not something that senior devs get right on the first try, while beginners have to try many times. It’s just that senior devs have seen a lot more so have a better understanding of why it probably went wrong, and maybe can avoid some more common pitfalls the first time around. But if you are writing bug free code in your first pass, well you’re a way better programmer than anyone I’ve met.

Ai is just another tool to make this happen. Sure, it’s not always the tool for the job, just like IoC is not always the right tool for the job. But it’s nice to have it and sometimes it makes things much easier.

Like just now I was debugging a large SQL query. I popped it into Copilot and asked it to break it into parts so I could debug. It gave a series of smaller queries that I then used to find the point where it fell apart. This is something that would have taken me at least a half hour of tedious boring work, fixed in 5 minutes.
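For anyone who hasn’t debugged a query this way, the idea is roughly the following. This is a sketch only, using an in-memory SQLite database from Python; the `customers` and `orders` tables, their rows, and the stage names are all invented for illustration and are not the query from the comment above.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, region TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL);
    INSERT INTO customers VALUES (1, 'EU'), (2, 'US');
    INSERT INTO orders VALUES (1, 1, 50.0), (2, 1, 70.0), (3, 3, 20.0);
""")

# Each stage adds one step of the (hypothetical) big query.
STAGES = {
    "all_orders": "SELECT * FROM orders",
    "joined": (
        "SELECT o.* FROM orders o "
        "JOIN customers c ON c.id = o.customer_id"
    ),
    "us_only": (
        "SELECT o.* FROM orders o "
        "JOIN customers c ON c.id = o.customer_id "
        "WHERE c.region = 'US'"
    ),
}


def stage_counts(connection, stages):
    """Run each stage on its own and report how many rows survive it."""
    return {
        name: connection.execute(f"SELECT COUNT(*) FROM ({sql})").fetchone()[0]
        for name, sql in stages.items()
    }


for name, count in stage_counts(conn, STAGES).items():
    print(f"{name}: {count} rows")
```

The stage where the row count drops unexpectedly is usually where the full query falls apart; here the join silently drops the order whose customer does not exist, and the region filter leaves nothing.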

Also for writing scripts. I want some data formatted so it’s easier to read? No problem, it will spit out a script that gets me 90% of the way there in seconds. Do I have to refine it? Absolutely. But if I wrote it myself, not being super proficient with Python, it would have taken me a half hour to get the structure in place, and then I still would have had to refine it because I don’t produce perfect code the first time around. And it comments the scripts, which I rarely do.

What also amazes me is that sometimes it will spit out code and I’ll be like “woah I didn’t even know you could do that” and so I learned a new technique. It has a very deep “understanding” of the syntax and fundamentals of the language.

Again, I find it shocking that experienced devs don’t find it useful. Not living up to the hype I get. But not seeing it as a productivity boosting tool is a real head scratcher to me. Granted, I’m no rockstar dev, and maybe you are, but I’ve seen a lot of shit in my day and understand that I’m legitimately a senior dev.

Have you used Google lately? At least chatGPT doesn’t make me scroll past a full page of ads before giving me a half wrong answer.

No. I don’t use Google, and I don’t use the internet without ad block.

Gen AI is best used with languages that you don’t use that much. I might need a python script once a year or once every 6 months. Yeah I learned it ages ago, but don’t have much need to keep up on it. Still remember all the concepts so I can take the time to describe to the AI what I need step by step and verify each iteration. This way if it does make a mistake at some point that it can’t get itself out of, you’ve at least got a script complete to that point.

Exactly. I can’t remember syntax for all the languages that I have used over the last 40 years, but AI can get me started with a pretty good start and it takes hours off of the review of code books.

I actually disagree. I feel it’s best to use for languages you’re good with, because it tends to introduce very subtle bugs which can be very difficult to debug, and code which looks accurate but isn’t. If you’re not totally familiar with the language, it can be even harder.

I test all scripts as I generate them. I also generate them function by function and test. If I’m not getting the expected output it’s easy to catch that. I’m not doing super complicated stuff, but for the few I’ve had to do, it’s worked very well. Just because I don’t remember perfect syntax because I use it a couple of times a year doesn’t mean I won’t catch bugs.
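Roughly what that function-by-function loop can look like, as a sketch: `parse_duration` is a made-up stand-in for one generated helper, and the checks are the kind you would write before asking for the next function.

```python
import re


def parse_duration(text: str) -> int:
    """Convert strings like '1h30m' or '45s' to seconds (hypothetical generated helper)."""
    units = {"h": 3600, "m": 60, "s": 1}
    parts = re.findall(r"(\d+)([hms])", text)
    # Reject input that has leftovers the pattern did not consume.
    if not parts or "".join(n + u for n, u in parts) != text:
        raise ValueError(f"unrecognised duration: {text!r}")
    return sum(int(n) * units[u] for n, u in parts)


def check_parse_duration() -> None:
    """Checks written by hand, independent of whatever produced the function."""
    assert parse_duration("45s") == 45
    assert parse_duration("1h30m") == 5400
    assert parse_duration("2m5s") == 125
    try:
        parse_duration("soon")
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError for bad input")


check_parse_duration()
print("parse_duration passed its checks")
```

If a check fails, the problem is confined to the one function just generated, which is the point of going one piece at a time.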

Why is the AI speaking in a bisexual gradient?

It’s the “new hype tech product background” gradient lol

Because all robots are bisexual

ARAB

Wait…

Assigned Cop At Birth

The left wants to turn the robots bisexual

Except AI doesn’t say “Is this it?”

It says, “This is it.”

Without hesitation and while showing you a picture of a dog labeled cat.

I have vivid examples of how bad AI is at programming.

My favorite is when it just keeps giving you the exact same answer you keep telling it is wrong

The best one I’ve used for coding is the IntelliJ AI. Idk how they trained that sucker but it’s pretty good at ripping through boiler plate code and structuring new files / methods based off how your project is already set up. It still has those little hallucinations, especially when you ask it to figure out more niche tasks. But it’s really increased my productivity, especially when getting a new repo set up. (I work with micro services)

Yeah, formatting is the only place that I really enjoy using AI. It’s great at pumping out blocks of stuff and frequently gets the general idea of what I’m going for with successive variables or tasks. But when you ask it to do complex things it wigs out. Like yesterday when it spit out a regex to look for something within multiple encapsulation chars just fine, but telling it to remove one of the chars it was looking for was impossible, apparently. Spent 5 min doing something I figured out in 2 minutes on a regex test site.
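For what it’s worth, that kind of regex is easier to edit if the delimiter pairs live in a dict and the pattern is built from it. The sketch below is a guess at the shape of the problem, not the actual regex from the comment: `PAIRS`, `enclosed_pattern` and `find_enclosed` are invented names, and nesting is not handled.

```python
import re

# Hypothetical set of "encapsulation chars": opening char -> closing char.
PAIRS = {"(": ")", "[": "]", "{": "}"}


def enclosed_pattern(pairs):
    """Build one alternation that captures the text between each pair."""
    alternatives = [
        f"{re.escape(opener)}([^{re.escape(closer)}]*){re.escape(closer)}"
        for opener, closer in pairs.items()
    ]
    return re.compile("|".join(alternatives))


def find_enclosed(text, pairs):
    """Return the contents of every enclosed span, in order of appearance."""
    pattern = enclosed_pattern(pairs)
    # Only one group participates per match; the others are None.
    return [
        next(group for group in match.groups() if group is not None)
        for match in pattern.finditer(text)
    ]


sample = "call(a) then [b] and {c}"
print(find_enclosed(sample, PAIRS))

# Dropping one delimiter is a one-line change to the dict, not to the regex.
without_brackets = {o: c for o, c in PAIRS.items() if o != "["}
print(find_enclosed(sample, without_brackets))
```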

My workmate literally used copilot to fix a mistake in our websocket implementation today.

It made one line of change… turned out it made the problem worse

AI coding in a nutshell. It makes the easy stuff easier and the hard stuff harder by leading you down thirty incorrect paths before you toss it and figure it out yourself.

It’s almost like working with shitty engineers.

Shitty engineers that can do grunt work, don’t complain, don’t get distracted and are great at doing 90% of the documentation.

But yes. Still shitty engineers.

Great management consultants though.

I give instructions to AI like I would to a brand new junior programmer, and it gives me back code that’s usually better than a brand new junior programmer. It still needs tweaking, but it saves me a lot of time. The drawback is that coding knowledge atrophy occurs pretty rapidly, and I’m worried that I’m going to forget how to write code without the AI. I guess that I don’t really need to worry about that, since I doubt AI is going anywhere anytime soon.

I guess whether it’s worth it depends on whether you hate writing code or reading code the most.

Is there anyone who likes reading code more than writing it?

Probably a mathematician or physicist somewhere.

I hate reading the code I wrote two days ago.

Maybe if you wrote better code …

/Jk

I write better code every day because yesterday’s code always looks bad haha.

Your hands and wrists must not hurt yet. You’ll eventually come to see writing code as tedium.

So what it’s really like is only having to do half the work?

Sounds good, reduced workload without some unrealistic expectation of computers doing everything for you.

> So what it’s really like is only having to do half the work?

If it’s automating the interesting problem solving side of things and leaving just debugging code that one isn’t familiar with, I really don’t see value to humanity in such use cases. That’s really just making debugging more time consuming and removing the majority of fulfilling work in development (in ways that are likely harder to maintain and may be subject to future legal action for license violations). Better to let it do things that it actually does well and keep engaged programmers.

People who rely on this shit don’t know how to debug anything. They just copy some code, without fully understanding the library or the APIs or the semantics, and then they expect someone else to debug it for them.

We do a lot of real-time control software, and just yesterday we were talking about how the newer folks are really good at using available tools and libraries, but they have less understanding of what’s happening underneath and they have problems when those tools don’t/can’t do what we need.

I see the same thing with our newer folks. (And some older folks too.) and management seems to encourage it. Scary scary stuff. Because when something goes wrong there’s only a couple of people who can really figure it out. If I get hit by a bus or laid off, that’s going to be a big problem for them.

Yep, you get it. And it’s really hard to get people to understand the value in learning to do that stuff without the tools.

In my experience, all the time it saves me (probably even more) is wasted on spotting bugs, and the bugs are in very subtle places.

Code is the most in depth spec one can provide. Maybe someday we’ll be able to iterate just by verbally communicating and saying “no like this”, but it doesn’t seem like we’re quite there yet. But also, will that be productive?

From a person who does zero coding. It’s a godsend.

Makes sense. It’s like having your personal undergrad hobby coder. It may get something right here and there but for professional coding it’s still worse than the gold standard (googling Stackoverflow).

Nah, you just need to be really specific in the requirements you give it. And if the scope of work you’re asking for is too large you need to do the high level design and decompose it into multiple parts for chatgpt to implement.

If you were 100% specific you would be effectively writing the code yourself. But you don’t want that, so you’re not 100% specific, so it makes up the difference. The result will include an unspecified percentage of code that does not fit what you wanted.

It’s like code Yahtzee, you keep re-rolling this dice and that dice but never quite manage to get the exact combination you need.

There’s an old saying about computers, they don’t do what you want them to do, they do what you tell them to do. They can’t do what you don’t tell them to do.

I know zero coding and trying to query something in snowflake or big query is basically not accessible to me. This is basically a cheat code for me.