- cross-posted to:

- technology@lemmy.world

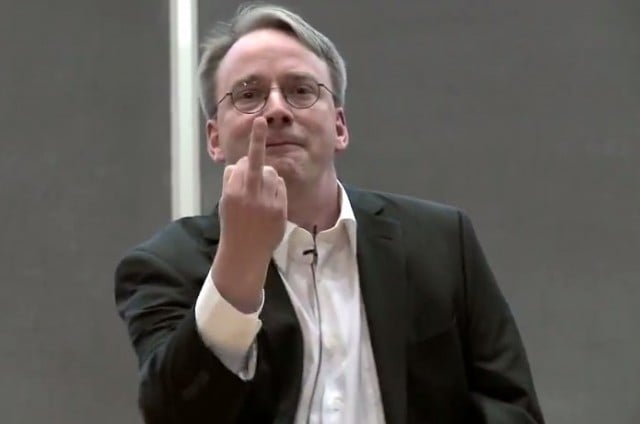

Who is that for? I would like to give the middle finger to 75% of those companies.

This is Linus Torvalds, creator, namesake, and supreme dictator of the Linux kernel. It’s from a video of him talking about his frustrations in working with NVIDIA. Essentially, NVIDIA treats Linux like a second-class citizen and its components don’t play nicely with the rest of the Linux code base. In this scene, Torvalds shows his middle finger and says “NVIDIA, fuck you!”.

Linus Torvalds’ (Linux kernel) response to Nvidia (which consistently treats Linux as a nobody for consumers)

It’s far worse than just simply ignoring what their users and customers want.

Nvidia makes a shit ton of money off of Linux. Between what their Tegra chips were sold for and their data center products being used on Linux (most recently in the AI space), their company is practically built on its success in using Linux for all their backend and supercomputing research.

They are literally as successful as they are because they use Linux themselves, yet they treat the community like shit. They take, take, take, and then they go and shit on the front lawn.

Their senior leadership is ethically bankrupt trash and can go fuck themselves.

Nvidia and Linux = Apple and open source, literally the same story

Took long enough - at a certain point Nvidia’s pricing just to get CUDA doesn’t make sense when compared to the cost of just investing in ROCm and OneAPI.

All they had to do was find the right balance, but apparently they decided to see how much money the printer could make…

I keep hearing how good AI is at coding these days, why can’t they just use it to rewrite all the model and library code up to full AMD support?

/s

Large companies that are themselves (near) monopolies see the risk of only having one supplier. This should be evident to all spectators.

Nuh, free markut regulgates itself. Smol govment only way (except for suppressing the minorities).

I mean, this is kinda the free market at work? Nvidia built and dominated a market, and AMD and Intel are pouring billions in to give people an alternative which will drive prices down?

True, but this will also help boost alternatives to nvidia for consumers too I’d wager, and with that probably also better Linux support along with it.

I very much hope this is a success for AMD, because for years I’ve wanted to use their chips for analysis and frankly the software interface is so far behind Nvidia it’s ridiculous, and because of that none of the tools I want to use support it.

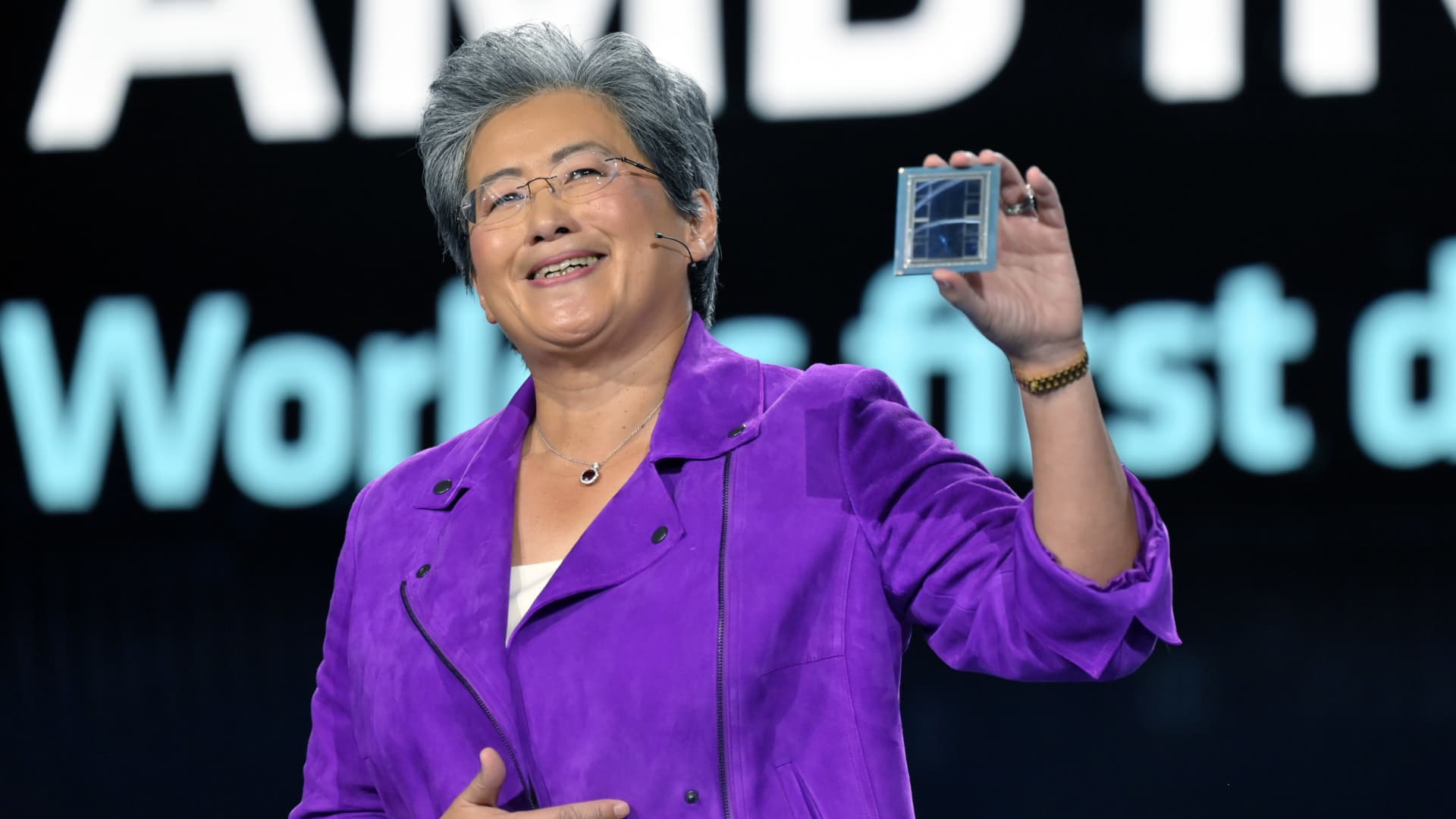

Attached is my review of AMD:

If we’re nitpicking about AMD: another thing I dislike about them is their smaller presence in the research space compared to their competitors. Both Intel and NVIDIA throw money into risky new ideas like crazy (NVM, DPUs, GPGPUs, P4, Frame Generation). Meanwhile, AMD seems to only hop in once a specific area is well established to have an existing market.

For consumer stuff, AMD is definitely my go-to. But it occurs to me that we need companies that are willing to fund research in Academia. Even if they don’t have a super good track record of getting profitable results.

Define software interface, because Adrenalin as an interface is miles ahead in modernity and responsiveness compared to the Nvidia Control Panel plus GeForce Experience.

The word you are looking for is features, because the interface is not what AMD is behind on at all.

CUDA vs ROCm. Both support OpenCL which is meh.

I target GPU for mathematical simulations and calculations, not really gaming

Hence it’s a feature set. CUDA has a more in-depth feature set because Nvidia is the leader and gets to dictate where compute goes. This in turn creates a feedback loop: devs use CUDA, which locks them more and more into the ecosystem. It’s a self-perpetuating problem until one side bows out, and it won’t be Nvidia.

It forces AMD to play catch-up and write a wrapper that converts CUDA into OpenCL because the devs won’t do it.

AI is the interesting situation, because the major libraries (e.g. PyTorch, TensorFlow) already have non-Nvidia backends, and with Microsoft’s desire to get AI compute to every PC, it makes more sense for them to partner with AMD/Intel, since a PC requires a processor while an Nvidia GPU in the PC is not guaranteed. This created a more natural escape from requiring CUDA. If a project requires an Nvidia GPU, it usually traces back to a small dev who programmed a feature with CUDA, not to the major library.
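To make the "non-Nvidia backends" point concrete, here is a rough sketch of how a script can check which backend its PyTorch install is using. It relies on the fact that ROCm builds of PyTorch expose AMD GPUs through the same `torch.cuda` API. The function name `describe_backend` and the returned note strings are made up for illustration, and the import is guarded so the snippet still runs on a machine without PyTorch.

```python
# Sketch only: report which compute backend PyTorch would use on this machine.
try:
    import torch
except ImportError:  # PyTorch is optional for this illustration
    torch = None


def describe_backend():
    """Return (device_name, note). Hypothetical helper, not a PyTorch API."""
    if torch is None:
        return ("cpu", "PyTorch not installed")
    if torch.cuda.is_available():
        # ROCm builds set torch.version.hip; CUDA builds leave it as None.
        if getattr(torch.version, "hip", None):
            return ("cuda", "ROCm/HIP build, AMD GPU")
        return ("cuda", "CUDA build, Nvidia GPU")
    return ("cpu", "no supported GPU found")


if __name__ == "__main__":
    device, note = describe_backend()
    print(f"device={device} ({note})")
```

Code that only ever asks for the `"cuda"` device through the library tends to run on either vendor. It is hand-written CUDA kernels in a project that tie it to Nvidia.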

AMD didn’t even have a good/reliable implementation of OpenCL, which I would have liked to see succeed over CUDA.

Intel and AMD dropped the ball massively for like 15 years after Nvidia released CUDA. It wasn’t quiet either, CUDA was pushed all over the place even when it came out.

I would kill to run my models on my own AMD linux server.

Does GPT4all not allow that? Or do you have specific other models?

I haven’t super looked into it but I’m not interested in playing the GPU game against the gamers so if AMD can do a Tesla equivalent with gobs of RAM and no display hardware I’d be all about it.

Right now it’s looking like I’m going to build a server with a pair of K80s off ebay for a hundred bucks which will give me 48GB of RAM to run models in.

Some of the LLMs it ships with are very reasonably sized and still impressive. I can run them on a laptop with 32GB of RAM.

This is very interesting! Thanks for the link. I’ll dig into this when I manage to have some time.

if AMD can do a Tesla equivalent with gobs of RAM and no display hardware I’d be all about it.

That segment of the market is less price-sensitive than gamers, which is why Nvidia is demanding the prices that they are for it.

An Nvidia H100 will give you 80GB of VRAM, but you’ll pay $30,000 for it.

AMD competing more strongly with Nvidia in the sector will improve pricing, but I doubt very much that it’s going to make compute cards cheaper than GPUs.

Besides, if you did wind up with compute cards being cheaper, you’d have gamers just rendering frames on compute cards and then using something else to push the image to the screen. I know that Linux can do that with PRIME, and I assume that Windows can as well. That’d cause their attempt to split the market by price to fail. Nah, they’re going to split things up by amount of VRAM on the card, not by whether there’s a video interface on it.

I suspect that a better option is to figure out ways to reasonably split up models to run on lower-VRAM GPUs in parallel.
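As a rough illustration of what splitting a model across lower-VRAM GPUs could look like, here is a minimal sketch that greedily assigns consecutive layers to cards until each card's memory budget is full. The `Layer` class, the `split_layers` function, and the sizes in the example are all hypothetical. A real setup would also need headroom for activations and caches, which this ignores.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    size_gb: float  # memory needed for this layer's weights (assumed known)


def split_layers(layers, vram_gb_per_gpu):
    """Greedily place consecutive layers on GPUs without exceeding each budget.

    Returns one list of layer names per GPU. Raises ValueError if the
    model does not fit on the given cards.
    """
    placement = [[] for _ in vram_gb_per_gpu]
    gpu = 0
    used = 0.0
    for layer in layers:
        # Move on to the next GPU while the current one cannot hold this layer.
        while gpu < len(vram_gb_per_gpu) and used + layer.size_gb > vram_gb_per_gpu[gpu]:
            gpu += 1
            used = 0.0
        if gpu == len(vram_gb_per_gpu):
            raise ValueError(f"model does not fit: ran out of GPUs at {layer.name}")
        placement[gpu].append(layer.name)
        used += layer.size_gb
    return placement


if __name__ == "__main__":
    # Eight made-up 2.5 GB blocks spread over two 12 GB cards.
    blocks = [Layer(f"block{i}", 2.5) for i in range(8)]
    for index, names in enumerate(split_layers(blocks, [12.0, 12.0])):
        print(f"GPU {index}: {names}")
```

Keeping layers contiguous means data only crosses between cards once per boundary, at the cost of each card sitting idle while the others work on a given input.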

Here’s an article about the chips for those uninterested in business crap: https://www.servethehome.com/amd-instinct-mi300x-gpu-and-mi300a-apus-launched-for-ai-era/

Thanks, I usually submit biz related stuff here, hardware related stuff to c/hardware@lemmy.ml. It’s not very active but I haven’t really found a good replacement to r/hardware on Lemmy.

I see.

On another note, the way you linked the sub doesn’t work for me, should be: !hardware@lemmy.ml
