The government says the project is at this stage for research only, but campaigners claim the data used would build bias into the predictions against minority-ethnic and poor people.

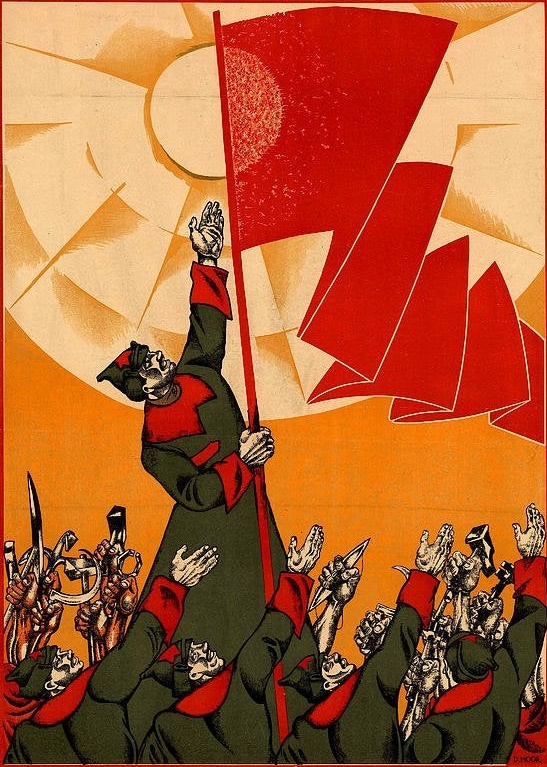

Minority Report.

The AI model:

Philip K Dick: “Imagine a horrible dystopia where the state uses magical psychics that are almost always right to stop crime. That would suck and be bad even for you, an eager collaborator and true believer in that system, because they could be wrong about you too.”

The UK: “Oi chatgpt get yer robot calipers out and tell me if 'e’s got a loicence for that telly?”

It’s funny because the precog system is clearly correct almost fucking ALL THE TIME. But because it can be wrong once, it’s evil.

But they’re gonna defend the shit out of these AI solutions despite being totally untrustworthy and wrong nearly half the time.

All this will do is reproduce racial profiling, except because it’s performed by an AI it’s ok because a human didn’t do it. “My computer told me to stop and search you”.

Idk why they think algorithms trained on human bias wouldn’t have the same bias.

The algorithms having the same bias is the positive to them.

They get to do the racism but they also get to blame something that isn’t themselves. Something that cannot be fired and is not accountable to anyone.

Yeah, that’s the UK after all.

It will 100% be implemented elsewhere too. American cops deporting people? “Sorry, the machine says you gotta go. So sayeth the machine, not me, I’m just following the machine’s orders.”

Authority will love this shit. They become completely unaccountable. The nebulous AI decides all.

The machine will put out the names of billionaires and insurance execs over and over to the point of glitching out. It will repeat “no ethical fjsjsifjvjdjsk under capitalism fjdjvicjsj” then crash.

It’ll put their names out repeatedly until it gets an exception coded to ignore them explicitly

The types of information processed include names, dates of birth, gender and ethnicity.

A section marked: “type of personal data to be shared” by police with the government includes various types of criminal convictions, but also listed is the age a person first appeared as a victim, including for domestic violence, and the age a person was when they first had contact with police.

Also to be shared – and listed under “special categories of personal data” - are “health markers which are expected to have significant predictive power”, such as data relating to mental health, addiction, suicide and vulnerability, and self-harm, as well as disability.

I didn’t think it was possible for me to hate a country this much. On the bright side, this will reduce trust in the police as people begin to catch on that literally having contact with the police could have you marked for life.

This data will be used to profile whether victims are later likely to become offenders, and at what age and under what circumstances that is likely to happen.

This means that being a victim and going to the police is actively harmful to yourself in the future.

Illegal to say becomes illegal to think

Aren’t there whole treatises themed after this, explicitly saying that it’s probably not a good idea?

UK creating ‘murder prediction’ tool to identify people most likely to kill

Get Tom Cruise on the horn!

Oh my god that gun is so stupid what happens if you don’t have the space to twirl it around lmao

Just constantly flagging yourself while your finger is constantly on the trigger. What could go wrong?

what happens if you don’t have the space to twirl it around lmao

Sick sticks!

Edit:

Oh my god that gun is so stupid

Lmao. That whole part is ridiculous, especially with how he just drives off in the car at the end, haha.