Big if true.

Even if it’s not true, it’s not surprising. And this is why centralized algorithmic control will never work.

> Even if it’s not true, it’s not surprising.

This is a weird sentence. I honestly have no idea what it means.

Fair enough. The point is just that if it’s a lie, it’s completely believable and that’s a problem.

Looks like there’s a GitHub link at the bottom of the page. Do any technical people want to take a look and give their take? This article raises a lot of red flags.

The docs give an example of a Trump character. It’s weird that they would do that, but people make choices.

But then I went to the Eliza project on GitHub and just searched the repo for "trump". Granted, I only spent about 10 minutes looking through the code that matches the keyword, but it definitely seems like everything is in place to have a Trump-like AI. There is also a note that the Trump bot doesn’t directly reply to questions but often diverts the conversation, so it was definitely tested.

That’s only the main branch; god knows what’s in the other branches. I’m sure that if someone invested significant time, more info could be gathered. Regardless, the program advertises itself as a way to create bots for social media, so surely someone has used it.

It’s difficult to believe OP (I definitely want to), but the software is concerning regardless.

Edit: I was a bit back and forth about the whole thing, but I feel like investigating the project definitely has some merit. I’m done for now, but I’d like to hear more opinions as well.

Actually, thinking more about it, it’s quite sinister. The characters they have available as examples are: c3po, cosmosHelper, Dobby, eternalAi, sbf, and trump.

Of those, I (and I’m guessing most people) only know c3po, Dobby and Trump, and Trump is the only human among those three. Now let’s say you want to test the application (which you can do from their website if you give them your ChatGPT API token). People are more likely to pick a character they know, so it’s likely to be one of those three. So just running the example with the Trump model because you want to test it has already launched a chatbot with right-leaning rhetoric.

I looked into the commit history and it doesn’t seem like there was a lot of activity before the US elections, which is weird since OP claims it was written for that purpose. Moreover, the Trump character wasn’t committed until two weeks before the election.

Of course it’s entirely possible to fake the git history, but I don’t think it’s likely.
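For anyone who wants to repeat the commit-history check, here is a rough sketch of how it could be done locally. It assumes you have cloned the repo and have `git` installed; the function names, the placeholder file path, and the `EVENT` constant are mine, not anything from the project. Keep in mind that git dates are self-reported by whoever made the commit, so this only shows what the history claims.

```python
import subprocess
from datetime import datetime, timezone

# Assumption: the election being discussed is the 2024 US election day.
EVENT = datetime(2024, 11, 5, tzinfo=timezone.utc)

def added_log_cmd(path):
    """Build a git command listing the commits that added `path`,
    printing hash, author date and committer date (strict ISO 8601)."""
    return ["git", "log", "--diff-filter=A", "--follow",
            "--format=%H %aI %cI", "--", path]

def parse_oldest(output):
    """git log prints newest first, so the last non-empty line is the
    oldest commit. Returns (hash, author_date, commit_date) or None."""
    lines = [ln for ln in output.splitlines() if ln.strip()]
    if not lines:
        return None
    commit, authored, committed = lines[-1].split()
    return (commit,
            datetime.fromisoformat(authored),
            datetime.fromisoformat(committed))

def days_before(event, when):
    """Whole days between `when` and a later `event`."""
    return (event - when).days

def first_added(repo_dir, path):
    """Run git inside `repo_dir` and return the oldest commit adding `path`."""
    result = subprocess.run(added_log_cmd(path), cwd=repo_dir,
                            capture_output=True, text=True, check=True)
    return parse_oldest(result.stdout)

# Hypothetical usage (both paths are placeholders, not the real file names):
#   info = first_added("path/to/clone", "path/to/character/file.json")
#   if info:
#       print(info[0], days_before(EVENT, info[1]), "days before the event")
```

If the author date and the committer date are far apart, that can hint at rebased or rewritten history, but it is not proof either way.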

I’m trying to understand who the people developing the software are. I should probably make a burner Twitter account so I can read more of their profiles…

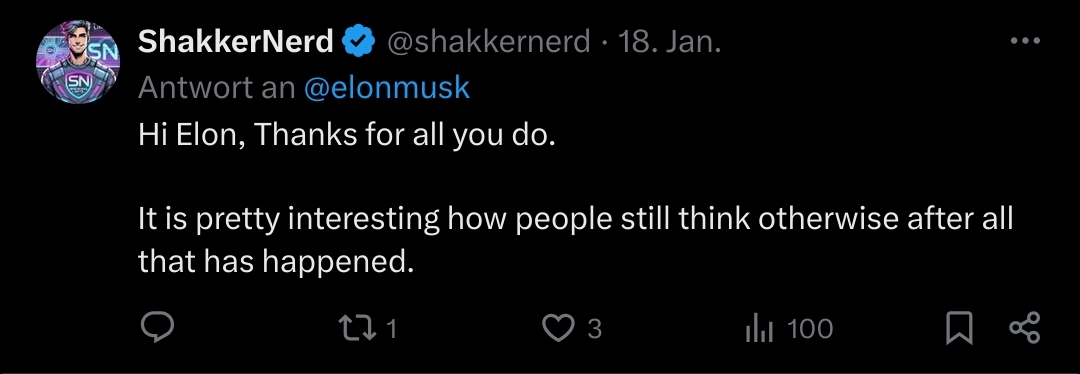

This is the second-highest contributor.

Not trying to start a witch hunt, there are plenty of Musk apologists, so this screenshot alone doesn’t prove anything.

I’m getting more confused about that person. On their GitHub, they have one repo, which is a fork of the main Eliza project. Apart from that, their only contributions are to the Eliza project. Fair enough if they want to have a separate GitHub account just for that purpose. But their Twitter account seems to belong to a crypto fan/developer, and Eliza is only mentioned once. So where are the crypto projects on their GitHub?

It’s an example that is odd.

By itself, it could be a meaningless example that someone made in poor taste.

It proves nothing empirically, but it’s not a normal example at all.

Without proof, this is just mediocre fanfiction.

Would no longer matter in these United States one whit. Godspeed, Europe.

If true, why now, why not 6 months ago when it mattered?

I have a hard time believing such a thing would not have leaked, with evidence, if it was that widespread.

> One of the most disturbing things we did was create thousands of fake accounts using advanced AI systems called Grok and Eliza.

It’s not true.

Eliza? Really!? Give me a break!