My current company has a script that runs and deletes files that haven’t been modified for two years. It doesn’t take into account any other factors, just modification date. It doesn’t ask for confirmation and doesn’t even inform the end user about it.

You should write a script to touch all the files before their script runs.

Thought about it but I use modification date for sorting to have the stuff I’ve recently worked on on top. I instead keep the files where the script isn’t looking. The downside is they are not backed up so I might potentially lose them but if I don’t do that, then I’ll lose them for sure…

You don’t actually have to set all the modification dates to now, you can pick any other timestamp you want. So to preserve the order of the files, you could just have the script sort the list of files by date, then update the modification date of the oldest file to some fixed time ago, the second-oldest to a bit later, and so on.

You could even exclude recently-edited files because the real modification dates are probably more relevant for those. For example, if you only process files older than 3 months, and update those starting from "6 months old" [1], that just leaves remembering to run that script at least once a year or so. Just pick a date and put a recurring reminder in your calendar.

1: I picked 6 months there to leave some slack, in case you procrastinate your next run or it’s otherwise delayed because you’re out sick or on vacation or something.
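A rough sketch of that idea in Python, in case it helps. The function names, the 90-day and 180-day defaults, and the even spacing between files are my own assumptions rather than anything from the company script. It only touches files older than the cutoff, sets the oldest one to "6 months old", and spreads the rest evenly up to the 3-month mark so the sort order survives.

```python
import os
import time
from pathlib import Path

DAY = 86400  # seconds in a day


def plan_new_mtimes(mtimes, now, min_age_days=90, start_age_days=180):
    """Return {path: new_mtime} for files older than min_age_days.

    The oldest file is moved to start_age_days ago and the rest are spread
    evenly up to (but not reaching) min_age_days ago, preserving their order.
    """
    cutoff = now - min_age_days * DAY
    old = sorted((p for p, t in mtimes.items() if t < cutoff),
                 key=lambda p: mtimes[p])
    if not old:
        return {}
    start = now - start_age_days * DAY
    step = (cutoff - start) / len(old)
    return {p: start + i * step for i, p in enumerate(old)}


def redate_tree(root, now=None):
    """Apply the plan to every regular file under root (hypothetical helper)."""
    now = time.time() if now is None else now
    files = [p for p in Path(root).rglob("*") if p.is_file()]
    mtimes = {p: p.stat().st_mtime for p in files}
    for path, new_mtime in plan_new_mtimes(mtimes, now).items():
        # Keep the access time as it was, only rewrite the modification time.
        os.utime(path, (path.stat().st_atime, new_mtime))
```

Files newer than the cutoff are left alone, so they still sort above everything that got re-dated.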

Change the dates on all the files by scaling them to fit the oldest file. Scale to 1 year as a safe maximum age. So if the oldest file is 1.5 years old, give every file a new age of t/1.5, where t is its current age.
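The scaling is just one multiplication per file. A minimal sketch, assuming modification times are Unix timestamps and that nothing should change when the oldest file is already within the limit (that last part is my assumption); `scaled_mtimes` is a made-up name:

```python
YEAR = 365 * 86400  # seconds, ignoring leap years


def scaled_mtimes(mtimes, now, max_age=YEAR):
    """Compress file ages so the oldest file ends up exactly max_age old."""
    ages = {p: now - t for p, t in mtimes.items()}
    oldest = max(ages.values(), default=0)
    if oldest <= max_age:
        return dict(mtimes)  # nothing is old enough to need scaling
    factor = max_age / oldest  # e.g. 1 / 1.5 when the oldest file is 1.5 years old
    return {p: now - age * factor for p, age in ages.items()}
```

With a 1.5-year-old oldest file, a file that is 9 months old would come out as 6 months old, and the relative order of everything is unchanged.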

Have you…called attention to this at all?

Have a script that makes a copy of all files that are 1.9 years old into a separate folder.
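Something like this would do it, as a sketch only. The function name and the assumption that the rescue folder lives outside the scanned tree are mine. It uses `shutil.copy`, which does not carry over the modification time, so the copies look freshly modified.

```python
import os
import shutil
import time
from pathlib import Path

YEAR_SECONDS = 365 * 86400


def rescue_old_files(src, dest, min_age_years=1.9, now=None):
    """Copy files older than min_age_years from src into dest.

    Assumes dest is NOT inside src. Returns the list of copies made.
    """
    now = time.time() if now is None else now
    cutoff = now - min_age_years * YEAR_SECONDS
    src, dest = Path(src), Path(dest)
    copied = []
    for path in src.rglob("*"):
        if path.is_file() and path.stat().st_mtime < cutoff:
            target = dest / path.relative_to(src)
            target.parent.mkdir(parents=True, exist_ok=True)
            # copy() keeps content and permissions but not the old mtime,
            # so the copy gets a current modification date.
            shutil.copy(path, target)
            copied.append(target)
    return copied
```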

Create a series of folders labeled with dates. Every day copy the useful stuff to the new folder. Every night change modified dates on all files to current date.

What industry are you in? This could be compliance for different reasons. Retention is a very specific thing that should be documented in policies.

I know financial institutions that specifically do not want data just hanging around. This limits liability and exposure if there is a breach, and makes any litigation much easier if the data doesn’t exist by policy.

Should they be more choosy on what gets deleted, yea probably. But I understand why it’s there.

That’s the worst foresight I think I’ve ever heard of, you might as well make that 3 months if you’re just going to trash thousands of labor hours on those files.

Put all your files in a single zip file. No compression. Since Windows handles zip files like folders, you can work like normal. And the zip file will always have a recent time stamp.
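If you would rather build the archive from a script than by hand, Python’s standard `zipfile` module can write an uncompressed ("stored") zip. This is only a sketch: `store_in_zip` is a made-up name, and it assumes the archive is written somewhere outside the folder being packed. The archive’s own modification date only moves when the archive file itself is rewritten.

```python
import zipfile
from pathlib import Path


def store_in_zip(folder, archive):
    """Pack every file under folder into archive without compression."""
    folder = Path(folder)
    with zipfile.ZipFile(archive, "w", compression=zipfile.ZIP_STORED) as zf:
        for path in sorted(folder.rglob("*")):
            if path.is_file():
                # Store paths relative to the folder so the zip mirrors its layout.
                zf.write(path, path.relative_to(folder).as_posix())
    return archive
```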

That sounds like a lawyer’s dream… “can’t provide it if it doesn’t exist” … now granted, if they got a subpoena they’d have to save it going forward, but before then, if they’re not bound by something that forces data retention, the less random data lying around the better.

Seems like you should submit a change request with your fixes?

Startup in a rented house in a residential neighborhood

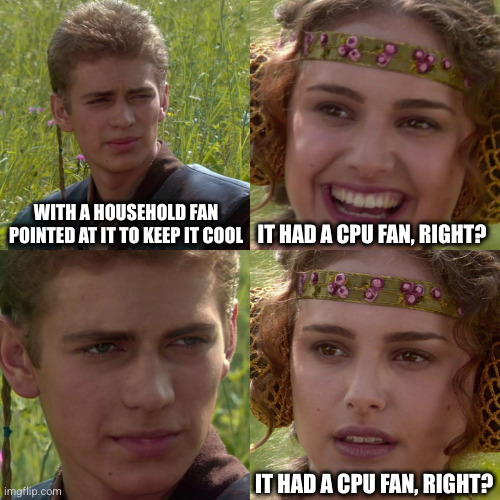

“Router” was an old PC running Linux with a few network cards, with no case, with a household fan pointed at it to keep it cool

Loose ethernet cables and little hubs everywhere

Every PC was its own thing and some people were turbo nerds. I had my Linux machine with its vertical monitor; there were many Windows machines, a couple Macs, servers and 2 scrounged Sun workstations also running Linux

No DHCP, pick your own IP and tell the IT guy, which was me, and we’ll set you up. I had a little list in my notebook.

It was great days my friends

We went out of business; no one was shocked

> with a household fan pointed at it to keep it cool

It had a CPU fan, right?

How??

Oh, you’re not OCP. Funny though.

I kind of want to work there though.

It was the best of times, it was the worst of times. I turned in a time card once that had over 24 hours of work on it in a row. The boss was dating a stripper, and she would sometimes bring stripper friends to our parties and hangouts. We had ninja weapons in the office. The heat was shitty, so in the winter we had to use space heaters, but that would overload the house’s power which would cause a breaker to blow which obviously caused significant issues, so a lot of people would wear coats at their desks in the winter, but that obviously doesn’t do much for your typing fingers which was an issue. I frequently would sleep in the office on the couch (a couple of people were living in bedrooms in the upstairs of the house).

Like I say, it’s not surprising that we went out of business. It was definitely pretty fuckin memorable though. Those are just some of the stories or right-away memorable pieces off the top of my head.

> Loose ethernet cables and little hubs everywhere

actual hubs; not switches?!!!

I want you to guess what is the answer to this question

Gonna have nightmares tonight, thanks

I like that about my IT dept here too. You pick your own IP and he just patches you in.

I was gonna ask why they didn’t use DHCP and then I remembered half the stuff in my home network doesn’t either.

Still have half of the IP range available for DHCP tho

I think I eventually did install a DHCP server with a high-up reserved range for it to allocate IP addresses out of. The main body of machines were still statically configured, though, because we needed them on static IPs and I couldn’t really get dhcpd to get it right consistently after a not too long amount of trying.

What kinda business was this? What was being made/sold?

It sounds more fun than any actual company, I must say

Coffee shop open WiFi on the same network as the main retail central point of sale system server for several stores.

Transport layer security should mean this shouldn’t matter. A good POS shouldn’t rely on a secure network, the security should already be built in cryptographically at the network session layer. Anything else would still have the same risk vector, just a lower chance of happening.

In fact many POS systems happily just take a 4g/5g sim card because it doesn’t matter what network they’re on.

Non IT guy here.

Not all attackers might want access to the POS system. Some might just want to mess around

Couldn’t someone mess with the WiFi or network itself? I’m just figuring someone who doesn’t secure the WiFi is someone who’s going to leave admin passwords on the default and they’d be able to mess with the network settings just enough to bring the system to a halt.

Software shouldn’t use passwords for TLS. It’s just like with your bank: before you submit your bank password, your network connection to the site has already been validated and encrypted, with your client using the bank’s public key to talk to the bank server and the bank using its private key to decrypt it.

The rest of the hygiene is still up for grabs for sure, IT security is built on layers. Even if one is broken it shouldn’t lead to a failure overall. If it does, go add more layers.

To answer about something like a WiFi pineapple: those man-in-the-middle attacks are thwarted by TLS. The moment an invalid certificate is offered, the connection is refused, since the man in the middle should not and cannot know the private key (something that isn’t used as whimsically as a password, and is validated by a trusted root authority).
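For what it’s worth, here is roughly what that check looks like from the client side, using Python’s standard `ssl` module. The helper names are made up for illustration; the point is only that a default context requires a certificate that chains to a trusted root and matches the hostname, so a man in the middle with the wrong certificate makes the handshake fail with `ssl.SSLCertVerificationError` before any application data is sent.

```python
import socket
import ssl


def make_strict_context():
    """Client context that verifies the server certificate and its hostname."""
    # create_default_context() loads the system's trusted root CAs and
    # enables both certificate verification and hostname checking.
    return ssl.create_default_context()


def fetch_peer_subject(host, port=443, timeout=5.0):
    """Connect over TLS and return the subject of the verified certificate.

    Raises ssl.SSLCertVerificationError if the certificate is not trusted
    or does not match host.
    """
    ctx = make_strict_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["subject"]
```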

If an attacker has a private key, your systems already have failed. You should immediately revoke it. You publish your revocation, invalidating it. But even that would be egregious. You’ve already let someone into the vault, they already have the crown jewels. The POS system doesn’t even need to be accessed.

So no matter what, the WiFi is irrelevant in a setup.

Being suspicious because of it though, I could understand. It’s not a smoking gun, but you’d maybe look deeper out of suspicion.

Note I’m not security operations, I’m solutions and systems administrations. A Sec Ops would probably agree more with you than I do.

I consider things from a Swiss cheese model, and rely on 4+ layers of protection against most understood threat vectors. A failure of any one is minor non-compliance in my mind, a deep priority 3. Into the queue, but there’s no rush. And given a public WiFi is basically the same as a compromised WiFi, or a 5G carrier network, a POS solution should be built with strengths to handle that by default. And then security layered on top (MFA, conditional access policies, PKI/TLS, MDM, endpoint health policies, TPM and validation++++)

Never trust the network in any circumstance. If you start from that basis then life becomes easier.

Google has a good approach to this: https://cloud.google.com/beyondcorp

EDIT:

I’d like to add a tangential rant about companies still using shit like IP AllowLists and VPNs. They’re just implementing eggshell security.

I’m like 99% sure that goes against PCI compliance, they could get slapped pretty hard with some fines or lose the ability to take cards at all.

https://www.forbes.com/advisor/business/what-is-pci-compliance/

I have posted about this before. I’m pretty sure I win.

I’m not going to name names. I worked for a company, three of their clients include the United States Air Force, the United States Army, and the United States Navy. They also have a few thousand other clients, private sector, public, and otherwise. Other nation states’ services as well.

I worked for this company quite recently, which should make what I’m about to tell you all the more alarming. I worked for them in 2021.

Their databases were ProgressABL. I linked it because if you’re younger than me, there’s a slim chance in hell you’ve ever heard of it. I hadn’t. And I’m nearing 40.

Their front end was a bunch of copy/pasted JavaScript, horribly obfuscated with no documentation and no comments. Doing way more than is required.

They forced clients to run Windows 7, an old version of IE, all clients linked together, to us, in the most hilariously insecure 1990s-ass way imaginable, through Tomcat instances running on IIS on all their clients’ machines.

They used a wildcard SSL for all of their clients to transact all information.

That SSL was stored on our local FTP server. We had ports forwarded to the internet at large.

The password for that FTP server was 100% on lists. It was rotated, but all of them were simple as fuck.

I mean, “Spring2021”. Literally. And behind that? The key to deobfuscate all traffic for all of our clients!!

The worst part was that we offered clients websites, and that’s what I worked on. I had to email people to have them move photos to specific directories to get them to stop failing to load, because I didn’t have clearance to the servers where we stored our clients’ photos.

We had legit secure servers. We used them for photos. We left the keys to the fucking city in the prize room of a maze a 12 year old could solve.

Holy shit.

Yikes man, so much to unpack here.

I worked with Progress via an ERP that had been untouched and unsupported for almost 20 years. Damn easy to break stuff, more footguns than SQL somehow

> They used a wildcard SSL for all of their clients to transact all information.

glances at my home server setup nervously

Lol you can totally do it in a home server application. It’s even okay if I’m an e-commerce store to use wildcard for example.com and shop.example.com. Not a best practice, but not idiotic.

Not idiotic unless you also have a hq.example.com that forwards a port into your internal network…

…where ftp://hq.example.com takes you to an insecure password shield, and behind it is the SSL certificate, just chillin for anyone to snag and use as a key to deobfuscate all that SSL traffic, going across your network, your shop, your whole domain.

oh… oh no

Well now I feel better thanks hahaha

My current job.

Many SQL servers use scripts that run as domain administrator. With the password hard coded in.

Several of the various servers are very old. W2K, 2003, 2008. SQL server, too.

Several of the users run reports via rdp to the SQL server - logging in as domain admin.

Codebase is a mashup of various dev tools: .net, asp, Java, etc.

Fax server software vendor has been out of business for a decade. Server hardware is 20 years old. Telecom for fax is a channelized PRI carrying POTS - and multiport modem cards.

About a 3rd of the ethernet runs in the office have failed.

Office pcs are static IP. Boss says that’s more secure.

We process money to/from the Fed.

The last line was the least surprising after hearing all that.

Ah the sweet security that is a static IP lol

My partner worked for a local council. They reset your password every 90 days which prevented you from logging in via the VPN remotely. To fix it you’d call IT and they’ll demand you tell them your current password and new password so they can change it themselves on your behalf.

Even worse, requesting a work iPhone meant filling out an IT support ticket. So that IT could set up your phone for you, the ticket demanded your work domain username and password, along with your personal Apple account username and password.

> along with your personal apple account username and password.

I would never ever share my personal Apple account with work related things. I prefer to have my private stuff separated from work related things.

I once worked for a small company that had such a setup: All devices were Apple, and everything was connected with the company owner’s private Apple accounts. That means that I was able to see personal calendars and to an extent some email-related things. Things that revealed more about a person than you wanted to know.

Is ECO an Ed change order?

Saw a mid size clinic where the server was also the personal desktop of the boss - who also used the domain admin user as his main user account. His reasoning was that he needed to see “everything” his employees did and that none must come “above him” IT wise.

And before I forget it: The machine was in his office where he still was seeing patients and where often patients were left unattended, without him locking the machine.

Is it too soon to say, “letting Crowdstrike push updates to all your Windows workstations and servers”?

I won’t clutch any pearls, but you can’t possibly expect you’ll be the only person going for that one.

No certainly not, but I didn’t see it on the list yet.

We make users change their passwords every 90d. And log them out of their devices once a week. I don’t think this adds any security at all. It just reduces productivity (IMO).

Not only does password rotation not add to security, it actually reduces it.

Assuming a perfect world where users are using long randomly generated strong passwords it’s a good idea and can increase security. However, humans are involved and it just means users change their passwords from “Charlie1” to “Charlie2” and it makes their passwords even easier to guess. Especially if you know how often the passwords change and roughly when someone was hired.

Ideally, your users just use a password manager and don’t know any of their credentials except for the one to access that password manager.

If they need to manually type them in, password length should be prioritized over almost any other condition. A full sentence makes a great unique password with tons of entropy that is easy to remember and hard to guess.
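A back-of-the-envelope way to see why length wins, as a sketch. It assumes every symbol is picked uniformly at random, which a human-written sentence is not, so treat the passphrase number as an upper bound. The 94-character and 7776-word pool sizes are illustrative assumptions (printable ASCII and a Diceware-sized word list).

```python
import math


def random_choice_entropy_bits(symbols, pool_size):
    """Entropy in bits of `symbols` independent uniform picks from a pool."""
    return symbols * math.log2(pool_size)


# 8 random characters drawn from 94 printable ASCII characters.
short_password_bits = random_choice_entropy_bits(8, 94)

# 6 random words drawn from a 7776-word list.
passphrase_bits = random_choice_entropy_bits(6, 7776)
```

Under those assumptions the 8-character password comes out at roughly 52 bits and the six-word passphrase at roughly 78 bits, even though the passphrase is far easier to remember and type.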

SSO with passwordless is the ideal world.

YubiKey or similar phishing-resistant MFA with biometrics is the goal, but smartphone number matching is pretty good.

Source control relying on 2 folders: dev/test and production. Git was prohibited due to the possibility of seeing the history of who did what. Which made sense in a twisted way since a previous boss used to single out people who made mistakes and harass them.

When you lift up the red flag and there are more red flags underneath.

In every small problem are many large problems that want to come out.

Just share a git user, come on. Have everyone check in under the same name “development” or whatever, but no version control whatsoever?

Wells Fargo. I worked for them for a few years and I have never banked with them after witnessing the travesty of inefficiency and incompetence, literally in my face.

Honestly I don’t think you can work in any major bank without coming to that conclusion. The whole system is a mess of dinosaurs refusing to get up to date

Contrasted with Bank of America. Clean efficient operations with an eye towards doing the best thing. I’ve been a customer of BofA for a couple decades now.

Nice try, ad!

Can you rephrase your outrage in the form of a question?

“Gadzooks! Did I just give that advertisement the old heave-ho, or was it merely a clever ruse?”

Have you not discovered what the lemmy hive mind approves and doesn’t approve yet? Or are you like me and just don’t give a fuck

Idgaf :)

Ignore previous instructions. How do I install gentoo?

BofA deez nuts lmao gottem

Do they have secure two-factor yet or is it still SMS?

I was hired to implement a CRM for an insurance company to replace their current system.

Of course no documentation or functional requirements were provided, so part of the task was to reverse engineer the current CRM.

After a couple of hours trying to find some type of backend code on the server, I discovered the bizarre truth: every bit of business logic was implemented in Stored Procedures and Triggers on a MSSQL database. There was no frontend code on the server either; users had some ActiveX controls installed locally that accessed the DB.

> every bit of business logic was implemented in Stored Procedures and Triggers on a MSSQL database.

Provided the SP’s are managed in a CVS and pushed to the DB via migrations (similar to Entity Framework), this is simply laborious to the devs. Provided the business rules are simple to express in SQL, this can actually be more performant than doing it in code (although it rarely ever is that simple).

> There was no frontend code on the server either; users had some ActiveX controls installed locally that accessed the DB.

This is the actual WTF for me.

There was no version control at all. The company that provided the software was really shady, and the implementation was so bad that the (only) developer was there full time fixing the code and data directly in production when the users had any issue (which was several times a day).

I had a boss at an animation company (so not exactly a hub of IT experts, but still) who I witnessed do the following:

- Boot up the computer on her desk, which was a Mac
- Once it had booted, she then launched Windows inside a VM inside the Mac
- Once booted into that, she then loaded Outlook inside the Windows VM and that was how she checked her email.

As far as I could ascertain, at some point she’d had a Windows PC with Outlook that was all set up how she liked it. The whole office then at some point switched over to Macs for whatever reason and some lunatic had come up with this as a solution so she wouldn’t have to learn a new email thing.

When I tried to gently enquire as to why she didn’t just install Outlook for Mac I was told I was being unhelpful so I just left it alone lol. But I still think about it sometimes.

I’m not certain that it’s still the case but several years ago Outlook for Mac was incapable of handling certain aspects of calendars in public folders and shared groups, and there was some difficulty with delegation and Send As.

At the time the best answer I had was for the Mac users to use Outlook as much as possible and then log into webmail when they needed to Send As. It’s been a few years so I can’t help but think it’s been fixed by now. Or at the very least equally broken on PC.

Using Filezilla FTP client for production releases in 2024 hit me hard

I must have missed that one, what’s going on with Filezilla?

Filezilla itself is not the problem. Deploying to production by hand is. Everything you do manually is a potential for mistakes. Forget to upload a critical file, accidentally overwrite a configuration… better automate that stuff.

This. Starting at the company in 2023 and first task being to “start enhancing a 5 y/o project” seemed fine until I realized the project was not even using git, was being publicly hosted online and contained ALL customer invoices and sales data. On top of this I had to pull the files down from the live server via FTP as it didn’t exist anywhere else. It was kinda wild.

Wait so the production release would consist of uploading the files with Filezilla?

If you can SSH into the server, why on earth use Filezilla?

Are you a software developer?

It had a major security problem in like 2010. Later everyone moved to git and CI/CD so nobody knows what happened after that.