Thanks! It seems the markdown is saved and federated out correctly, so lemmy’s markdown to html is parsing it as intended. What is going wrong is piefed’s markdown to html parsing in this specific case.

wjs018

- 15 Posts

- 72 Comments

Wait, so what is that issue? If you bold something in two different places it gets messed up?

Edit: it sure does…I will take a look at that when I look at formatting comment previews correctly.

I don’t know enough about how the JS works to say whether the VPN would cause it, but @innermeerkat@jlai.lu was already working on this because their browser was messing it up and insisting they lived in Reykjavik. So, it clearly isn’t such a rare case that we can ignore it. We think it might be a privacy feature of some browsers that they don’t want to give away a tz.
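As a rough illustration of the kind of fallback being discussed, here is a minimal sketch in Python. It is not piefed's actual code. It assumes the browser reports an IANA timezone name to the server, and that a privacy-hardened browser may report a placeholder zone instead of the real one. The function name, the placeholder list, and the saved-preference parameter are all hypothetical.

```python
from typing import Optional

# Assumption: zone names a privacy-hardened browser might report in place of
# the real one. This list is illustrative, not taken from piefed.
PLACEHOLDER_ZONES = {"UTC", "Etc/UTC", "Atlantic/Reykjavik"}


def pick_timezone(browser_tz: Optional[str], saved_tz: Optional[str]) -> str:
    """Choose which timezone to display times in.

    Prefers an explicit user setting, then a browser-reported zone that does
    not look like a placeholder, and finally falls back to UTC.
    """
    if saved_tz:
        return saved_tz
    if browser_tz and browser_tz not in PLACEHOLDER_ZONES:
        return browser_tz
    return "UTC"
```

A user who really is in Reykjavik loses nothing with this approach, since Iceland stays on UTC all year. Everyone else gets a way to override whatever the browser claims.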

On piefed

They both appear stickied just fine to me. Something that is different with sticky posts on piefed is that if you sort by New, then the sticky posts are no longer at the top of the page. That is different than how lemmy does it. This has led to confusion in the past, and even has a codeberg issue about it.

On lemmy

I am guessing that federation had not yet been established with that lemmy instance when you created and stickied that initial welcome post. I just tried an experiment where I subscribed to your community from a test lemmy instance I have and forced federation of one of the sticky posts, and it didn’t come over stickied. So, there might be some weirdness there.

What you can do to try to resolve this is to unsticky it and then resticky it. That should send out the correct actions via activitypub so that the other instances know that the post should be stickied. To be on the safer side, give it a minute or two between those two actions so that you are sure the first action has been sent out fully.
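To make the sort-order difference mentioned earlier concrete, here is a small Python sketch of the two behaviors when sorting by New. This is only an illustration of the idea, not code from piefed or lemmy. The `Post` class and both function names are made up for the example.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    created: int  # larger means newer; a stand-in for a real timestamp
    sticky: bool = False


def sort_new_plain(posts):
    """Newest first, ignoring the sticky flag."""
    return sorted(posts, key=lambda p: p.created, reverse=True)


def sort_new_pinned(posts):
    """Sticky posts first, then newest first within each group."""
    return sorted(posts, key=lambda p: (not p.sticky, -p.created))


posts = [
    Post("Welcome", created=1, sticky=True),
    Post("Older post", created=2),
    Post("Newest post", created=3),
]
```

With the plain sort, the old sticky "Welcome" post ends up at the bottom of the list. With the pinned sort, it stays on top no matter how old it is.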

The height of notifications does vary a bit depending on the type of notification. Some of the admin notifications in particular are a bit taller than the others, but I did try to tame them. The nice thing about how the notifications were re-architected by Jolly is that each one is a separate template file. So, if we want to tweak a specific type of notification, it is easier to do now compared to before.

Raw markdown in the notification is good feedback. This isn’t something I had tested when working on how these display, so I can take a look at this.

3·7 days ago

I could see this maybe being useful at a moderator or admin level to aid in moderation decisions, but I feel like the average user wouldn’t really gain much from this information in exchange for a more complicated UI.

Just as an aside, in the dev chat we have been talking about this a lot and the UI has been one of the harder pieces. The main competing paradigm for the UI of this feature is to have two upvote and two downvote buttons (one set for public votes and another for local-only votes). Here is a mockup I made of the voting buttons for that:

4·7 days ago

Just to be clear, this feature doesn’t prevent federated votes coming in from other instances, it just prevents your vote from federating out unless you want it to. So, brigading from other instances could still happen (barring the use of any of the other tools available to a piefed admin).

Piefed (and lemmy I believe) do have options to have a local-only community though. That community is only interactable by local members of that instance, which would prevent the brigading scenario. This is set on a per-community basis though.
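The two rules above can be sketched roughly like this in Python. This is a simplified model under my own assumptions, not how piefed implements it. Both function names and their parameters are hypothetical.

```python
def federates_out(public_vote: bool, community_local_only: bool) -> bool:
    """Whether a local user's vote is sent to other instances.

    A vote leaves the instance only if the user marked it public and the
    community is not local-only.
    """
    if community_local_only:
        return False
    return public_vote


def accepts_remote_vote(community_local_only: bool) -> bool:
    """Whether a vote arriving from another instance is counted.

    The local-only vote feature does not affect this at all; only the
    per-community local-only setting does.
    """
    return not community_local_only
```

The point of splitting it into two functions is that the outgoing and incoming directions are independent. Keeping your own vote local does nothing to stop remote votes from arriving.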

New notification formats are live now with a bonus mark read/unread button. Feel free to provide feedback either here or codeberg.

I am working on redoing the notifications page a bit. You can see a preview of what it will look like and provide feedback here. It will probably be a day or two for me to finish updating the templates for all the different notification types.

Just one of the limitations of the fediverse as it exists today. FWIW, lemmy works the exact same way. In its case, you pop in the url of a post you want to fetch in its search bar and it will find it and bring it over. When I have seen people confused about lemmy/mbin/piefed in the past, federation weirdness like this is one of the primary pain points.

As mentioned, if you subscribe to the community, everything going forward will federate over just fine. If there are specific posts you want to force piefed to fetch for you, then you can do so.

On the sidebar for the community, under where the mods are listed, select “Retrieve a post from the original server.” That lets you pop in a link to the post from the lemmy server and it will make piefed bring it over. It won’t bring the comments though.

There’s nothing wrong with posting here. Not everybody has a codeberg account or has any idea how to report an issue.

I actually don’t think this has an existing issue on codeberg since it is so new, but we have been talking about it in the dev chat room. I am sure it is going to be addressed soon.

2·8 days ago

Thanks! I honestly have no idea what is going on, but the fact that it hasn’t repeated at all makes me think that this might be tough to figure out. If you do see it happen again, please point it out so that we can better track down what might be a common root cause.

1·8 days ago

Ok, I went back through your post history and found the post in question. I can confirm that something is seemingly broken with that post, though I am not exactly sure what. Did you make this post via the website or in an app?

@rimu@piefed.social - Looking at the page source, there are `<em>` tags all over the place, even when they are not included in the template files. No idea what could be going on. Post in question.

3·8 days ago

Hmm, a couple questions then:

- What theme are you using? Try changing your theme and see if that helps.

- Is it the whole site (piefed.social), or just that one post?

- If it is the whole site, does the same thing happen when you view content on another piefed instance, like feddit.online?

2·9 days ago

This has happened to me on lemmy a handful of times. Usually I can just hard refresh (ctrl + f5) to fix it. I think it is just that the css isn’t loaded.

3·9 days ago

IIRC, world is intentionally left out to help spread users out to other instances.

2·14 days ago

This is not currently possible in piefed. However, it is a good idea and a feature of lemmy that I have used on occasion. I think this only really makes sense when making a “Link” post in piefed parlance.

I created a codeberg issue for this.

3·20 days ago

Is this where that happens? I am having a hard time untangling all the AP stuff in the codebase.

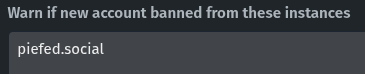

For what it’s worth, I don’t think this is the case any longer. I have been spinning up new dev instances a ton with docker and the trusted instances list is empty. The one place that piefed.social is listed in the admin panels I found is in the “Warn if new account banned from these instances” box:

I have been on a bit of a mission to try to make a lot of the more opinionated moderation tools in piefed optional at an admin level or remove them (so far rimu has been receptive). So, if this is in the code, I would want to make a PR to remove it.

The user that created the community is treated differently in that they cannot be removed by the other moderators. I believe that user is referred to as the owner in the code. I haven’t looked into it yet, but I think there is a way to transfer ownership of the community. If not, then there should be.

As far as I know, that is really the only difference between the owner and the other community moderators.