I really like the “Feeds” feature of PieFed. It lets anyone combine all of the different communities on a topic into a single feed, which makes browsing something like “Technology” a lot better.

Based on the uptick in “I was banned from Reddit” posts, I’m thinking that we’re getting a lot more users that were banned for good reason from Reddit. Looks like Reddit has also stepped up their game in their ability to keep those users off their platform.

Ikidd updated their comment: it’s only a 7B model.

Last I heard from her was back around the beginning of April.

It’s not sending the audio to an unknown server. It’s all local. From the article:

The system then translates the speech and maintains the expressive qualities and volume of each speaker’s voice while running on a device, such mobile devices with an Apple M2 chip like laptops and Apple Vision Pro. (The team avoided using cloud computing because of the privacy concerns with voice cloning.)

Looks like they hadn’t even finished fixing it up after the previous whomping it received.

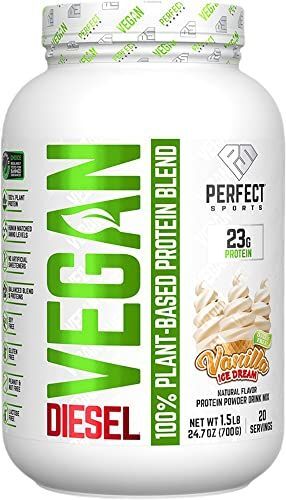

That’s not AI, that’s just a bad Photoshop/InDesign job where they layered the text underneath the image of the coupon with protein bottles. The image has a white background; if it had a transparent background, there would have been no issue.

Edit: Looking a little closer, it looks more like some barely off-white arrow was at the top of the coupon image.

Edit2: if you’re talking about the text that looks like a prompt, it could be a prompt, or it could be a description of what they wanted someone to put on the poster. The image itself doesn’t look like AI considering those products actually exist and AI usually doesn’t do so well on small text when you zoom in on a picture.

Highlighting the main issue here (from the article):

“This means that it is possible for the WhatsApp server to add new members to a group,” Martin R. Albrecht, a researcher at King’s College in London, wrote in an email. “A correct client—like the official clients—will display this change but will not prevent it. Thus, any group chat that does not verify who has been added to the chat can potentially have their messages read.”

Google sells it as an updated extension framework to improve the security, privacy, and performance of extensions… But it also nerfs adblockers’ ability to block all ads.

There are some forks of Chrome that haven’t implemented the new manifest changes. So if you really need to, look for those.

I’ll take these over something that attempts to “dub” what they’re saying.

Some cool tech that’s likely coming about because of AI/ML. Thanks for sharing!

Unless their company has enterprise M365 accounts and Copilot is part of the plan.

Or if they’re running a local model.

Looks like they’re finally cleaning up a bunch of junk.

In July 2024, Google announced it would raise the minimum quality requirements for apps, which may have impacted the number of available Play Store app listings.

Instead of only banning broken apps that crashed, wouldn’t install, or run properly, the company said it would begin banning apps that demonstrated “limited functionality and content.” That included static apps without app-specific features, such as text-only apps or PDF file apps. It also included apps that provided little content, like those that only offered a single wallpaper. Additionally, Google banned apps that were designed to do nothing or have no function, which may have been tests or other abandoned developer efforts.

Looks like the research was only looking at Denmark:

economists Anders Humlum and Emilie Vestergaard looked at the labor market impact of AI chatbots on 11 occupations, covering 25,000 workers and 7,000 workplaces in Denmark in 2023 and 2024.

Nice! The main character was fairly consistent across the scenes. Still some improvements to be made, but keep up the great work!

I think you’re in the wrong community…

It’s probably better this way.

Otherwise you end up with people accusing movies of using AI when they didn’t.

And then there’s the question of how you decide where to draw the line for what’s considered AI as well as how much of it was used to help with the end result.

Did you use AI for storyboarding, but no diffusion tools were used in the end product?

Did one of the writers use ChatGPT for brainstorming some ideas, but nothing was copied and pasted directly from it?

Did they use a speech-to-text model to help create the subtitles in different languages, but then double-check all the work with translators?

Etc.

You can use something like Anything LLM for RAG:

https://github.com/Mintplex-Labs/anything-llm

It works with local models.

https://docs.anythingllm.com/agent/usage#what-is-rag-search-and-how-to-use-it
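If you’re wondering what RAG boils down to, here’s a rough sketch of the idea: find the documents most relevant to the question, then paste them into the prompt you send to the model. This is not how AnythingLLM does it internally (real tools use embedding models and a vector database). The word-overlap scoring, the function names, and the sample documents are all made up for illustration.

```python
import math
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0

def retrieve(question, documents, k=2):
    """Return the k documents that overlap most with the question."""
    q = Counter(tokenize(question))
    ranked = sorted(
        documents,
        key=lambda d: cosine(q, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:k]

def build_prompt(question, documents, k=2):
    """Stuff the retrieved documents into a prompt for a (local) model."""
    context = "\n".join(f"- {d}" for d in retrieve(question, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

# Hypothetical sample documents standing in for your own files.
DOCS = [
    "The office router password is rotated every 90 days.",
    "Lunch is served in the cafeteria from noon to 2pm.",
    "VPN access requires a hardware token issued by IT.",
]

if __name__ == "__main__":
    print(build_prompt("When is lunch served?", DOCS, k=1))
```

The string from `build_prompt` is what you would hand to whichever local model you’re running. The retrieval step is the part that tools like AnythingLLM automate for you.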

Oh man, the times I could have used this. Thanks!

The image for #3 isn’t showing up for me.

“environmentally damaging”

I see a lot of users on here saying this when talking about any use case for AI without actually doing any sort of comparison.

In some cases, AI absolutely uses more energy than an alternative, but you really need to break it down and it’s not a simple thing to apply to every case.

For instance: using an AI visual detection model hooked up to a camera to detect when rain droplets are hitting the windshield of a car. A completely wasteful example. In comparison, you could just use a small laser that pulses every now and then and measures the diffraction to tell when water is on the windshield. The laser uses far less electricity, and that kind of sensor already works just fine in cars today.

Compare that to enabling DLSS in a video game, where NVIDIA uses multiple AI models to improve performance. As long as you cap the frame rate, the additional frame generation, upscaling, etc. will actually conserve electricity, as your hardware is no longer working as hard to process and render the graphics (especially if you’re playing on a 4K monitor).

Looking at Wikipedia’s use case, how long would it take for users to go through and create a summary or a “simple.wikipedia” page for every article? How much electricity would that use? Compare that to running everything through an LLM once and quickly generating a summary (a use case where LLMs actually excel). It’s honestly not that simple either, because we would also have to consider how often these summaries are being regenerated. Is it every time someone makes a minor edit to a page? Is it every few days/weeks after multiple edits have been made? Etc.

Then you also have to consider, even if a particular use case uses more electricity, does it actually save time? And is the time saved worth the extra cost in electricity? And how was that electricity generated anyway? Was it generated using solar, coal, gas, wind, nuclear, hydro, or geothermal means?
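To show the kind of comparison I mean, here’s a back-of-the-envelope sketch. Every number in it is a made-up placeholder (the wattages, the durations, the number of regenerations, and the grid carbon intensities), so swap in real measurements before drawing any conclusions from it.

```python
def energy_wh(power_watts, hours, runs=1):
    """Energy in watt-hours for `runs` repetitions of a task."""
    return power_watts * hours * runs

def emissions_g(wh, grid_g_per_kwh):
    """Grams of CO2 for a given energy use and grid carbon intensity."""
    return wh / 1000 * grid_g_per_kwh

# Placeholder: a person writing one summary on a 60 W laptop for 30 minutes.
manual_wh = energy_wh(power_watts=60, hours=0.5)

# Placeholder: a 400 W GPU busy for 36 seconds, regenerated 5 times after edits.
llm_wh = energy_wh(power_watts=400, hours=0.01, runs=5)

# Placeholder carbon intensities in grams of CO2 per kWh.
GRIDS = [("mostly coal", 800), ("mostly hydro", 25)]

if __name__ == "__main__":
    for label, wh in [("manual", manual_wh), ("LLM", llm_wh)]:
        for grid, intensity in GRIDS:
            grams = emissions_g(wh, intensity)
            print(f"{label:>6} on {grid}: {wh:.1f} Wh, {grams:.2f} g CO2")
```

The point is that the answer moves with the inputs: how often the summary is regenerated and how the electricity was produced can matter as much as which approach you pick.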

Edit: typo